
Alibaba has released Qwen3-Max, a trillion-parameter Mixture-of-Experts (MoE) model positioned as its most capable foundation model to date, with an immediate public on-ramp via Qwen Chat and Alibaba Cloud’s Model Studio API. The launch moves Qwen’s 2025 cadence from preview to production and centers on two variants: Qwen3-Max-Instruct for standard reasoning/coding tasks and Qwen3-Max-Thinking for tool-augmented “agentic” workflows.

What’s new at the model level?

- Scale & architecture: Qwen3-Max crosses the 1-trillion-parameter mark with an MoE design (sparse activation per token). Alibaba positions the model as its largest and most capable to date; public briefings and coverage consistently describe it as a 1T-parameter class system rather than another mid-scale refresh.

- Training/runtime posture: Qwen3-Max was pretrained on ~36T tokens (~2× Qwen2.5), with a corpus that skews toward multilingual, coding, and STEM/reasoning data. Post-training follows Qwen3’s four-stage recipe: long CoT cold-start → reasoning-focused RL → thinking/non-thinking fusion → general-domain RL. Alibaba confirms >1T parameters for Max; treat token counts and routing details as team-reported until a formal Max tech report is published.

- Access: Qwen Chat showcases the general-purpose UX, while Model Studio exposes inference and “thinking mode” toggles (notably, incremental_output=true is required for Qwen3 thinking models). Model listings and pricing sit under Model Studio, with availability varying by region.
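The access bullet above names one concrete request flag. As a minimal sketch, a request body for a thinking model might be assembled as follows. Only incremental_output comes from the article; the helper name, the model identifier, and the DashScope-style layout (model, input.messages, parameters) are assumptions for illustration and should be checked against the Model Studio reference.

```python
import json


def build_thinking_request(model: str, prompt: str) -> dict:
    """Build a request body with incremental streaming output switched on.

    Only `incremental_output` is taken from the article. The surrounding
    layout (`input.messages`, `parameters`) is an assumed, DashScope-style shape.
    """
    return {
        "model": model,
        "input": {"messages": [{"role": "user", "content": prompt}]},
        "parameters": {
            # Reported to default to false, so it is set explicitly here.
            "incremental_output": True,
        },
    }


# Hypothetical model identifier, used only for illustration.
request_body = build_thinking_request(
    "qwen3-max-thinking-placeholder", "Summarize how MoE routing works."
)
print(json.dumps(request_body, indent=2))
```

Building the body in one helper keeps the flag from being forgotten when several call sites target the same model.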

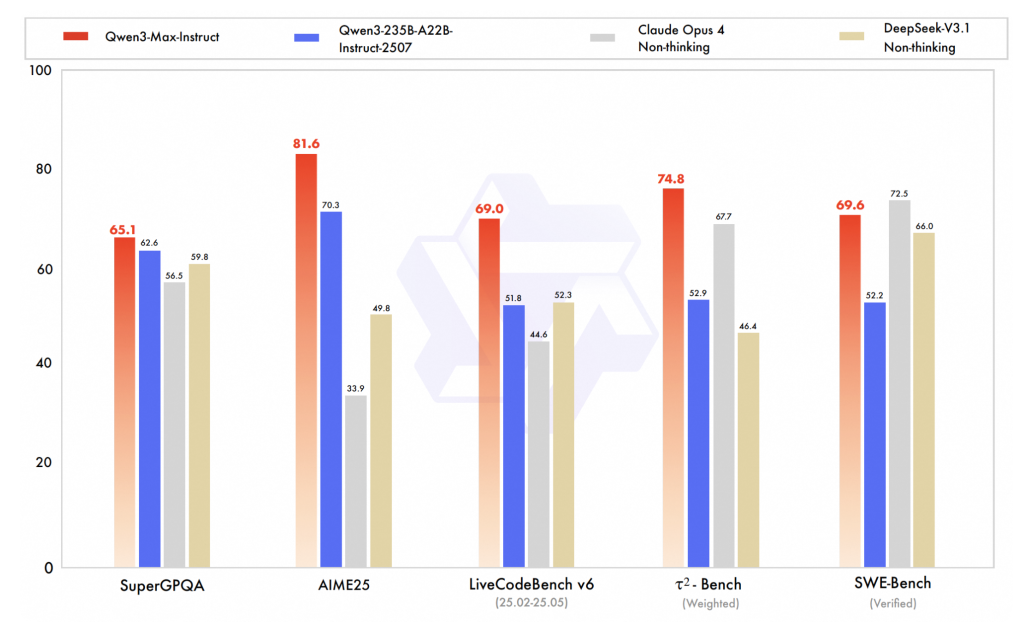

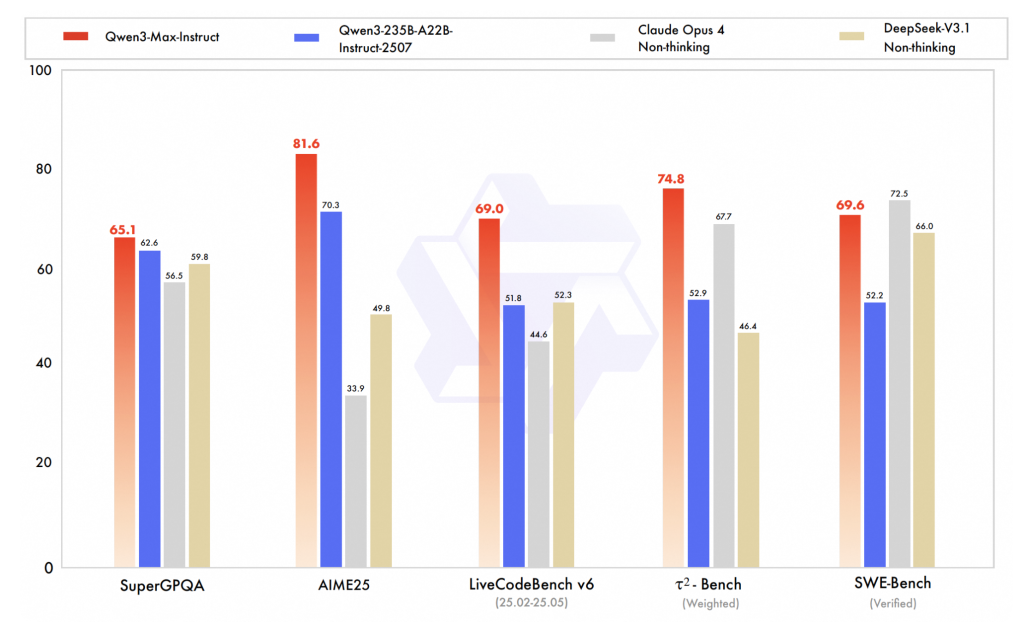

Benchmarks: coding, agentic control, math

- Coding (SWE-Bench Verified). Qwen3-Max-Instruct is reported at 69.6 on SWE-Bench Verified. That places it above some non-thinking baselines (e.g., DeepSeek V3.1 non-thinking) and slightly below Claude Opus 4 non-thinking in at least one roundup. Treat these as point-in-time numbers; SWE-Bench evaluations move quickly with harness updates.

- Agentic tool use (Tau2-Bench). Qwen3-Max posts 74.8 on Tau2-Bench—an agent/tool-calling evaluation—beating named peers in the same report. Tau2 is designed to test decision-making and tool routing, not just text accuracy, so gains here are meaningful for workflow automation.

- Math & advanced reasoning (AIME25, etc.). The Qwen3-Max-Thinking track (with tool use and a “heavy” runtime configuration) is described as near-perfect on key math benchmarks (e.g., AIME25) in multiple secondary sources and earlier preview coverage. Until an official technical report drops, treat “100%” claims as vendor-reported or community-replicated, not peer-reviewed.

Why two tracks—Instruct vs. Thinking?

Instruct targets conventional chat/coding/reasoning with tight latency, while Thinking enables longer deliberation traces and explicit tool calls (retrieval, code execution, browsing, evaluators), aimed at higher-reliability “agent” use cases. Critically, Alibaba’s API docs formalize the runtime switch: Qwen3 thinking models only operate with streaming incremental output enabled. The flag defaults to false on the commercial models, so callers must set it explicitly. This is a small but consequential contract detail if you’re instrumenting tools or chain-of-thought-like rollouts.
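What the flag changes for a client can be sketched without any network call. The example below assumes that, with incremental output on, each streamed chunk carries only newly generated text, and that with it off, each chunk repeats the full text so far. The chunk contents and all function names are made up for illustration.

```python
from typing import Iterable


def assemble_incremental(chunks: Iterable[str]) -> str:
    """Each chunk holds only newly generated text, so concatenate them."""
    return "".join(chunks)


def assemble_cumulative(chunks: Iterable[str]) -> str:
    """Each chunk repeats everything generated so far, so keep the last one."""
    last = ""
    for chunk in chunks:
        last = chunk
    return last


def check_contract(is_thinking_model: bool, parameters: dict) -> None:
    """Fail fast when a thinking-model request omits the required flag."""
    if is_thinking_model and parameters.get("incremental_output") is not True:
        raise ValueError("thinking models need incremental_output=True")


# Simulated streams (hypothetical content) for the two modes.
incremental_chunks = ["The answer", " is", " 42."]
cumulative_chunks = ["The answer", "The answer is", "The answer is 42."]

incremental_text = assemble_incremental(incremental_chunks)
cumulative_text = assemble_cumulative(cumulative_chunks)
print(incremental_text == cumulative_text)

check_contract(True, {"incremental_output": True})  # passes silently
```

Mixing the two assembly strategies is the usual failure: concatenating cumulative chunks duplicates text, and keeping only the last incremental chunk drops most of it.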

How to reason about the gains (signal vs. noise)?

- Coding: A 60–70 SWE-Bench Verified score range typically reflects non-trivial repository-level reasoning and patch synthesis under evaluation harness constraints (e.g., environment setup, flaky tests). If your workloads hinge on repo-scale code changes, these deltas matter more than single-file coding toys.

- Agentic: Tau2-Bench emphasizes multi-tool planning and action selection. Improvements here usually translate into fewer brittle hand-crafted policies in production agents, provided your tool APIs and execution sandboxes are robust.

- Math/verification: “Near-perfect” math numbers from heavy thinking-mode configurations underscore the value of extended deliberation plus tools (calculators, validators). Portability of those gains to open-ended tasks depends on your evaluator design and guardrails.
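One rough way to separate signal from noise in the points above is to check whether a score gap exceeds sampling error. The sketch below treats each benchmark task as an independent pass/fail trial and uses the 500-task size of SWE-Bench Verified. The 68.0 comparison score is hypothetical, and because two models are normally scored on the same tasks, the independence assumption is only an approximation.

```python
import math


def standard_error(score_pct: float, n_tasks: int) -> float:
    """Binomial standard error of a pass rate, in percentage points."""
    p = score_pct / 100.0
    return 100.0 * math.sqrt(p * (1.0 - p) / n_tasks)


def exceeds_noise(score_a: float, score_b: float, n_tasks: int, z: float = 1.96) -> bool:
    """True if the gap between two pass rates is larger than z standard errors."""
    se_diff = math.sqrt(
        standard_error(score_a, n_tasks) ** 2 + standard_error(score_b, n_tasks) ** 2
    )
    return abs(score_a - score_b) > z * se_diff


N_TASKS = 500  # number of tasks in SWE-Bench Verified

se = standard_error(69.6, N_TASKS)
print(f"standard error at 69.6: {se:.2f} points")

# 68.0 is a hypothetical competitor score, used only for illustration.
print(exceeds_noise(69.6, 68.0, N_TASKS))
```

With about two points of standard error on a single score, gaps of one or two points between models on this benchmark are hard to distinguish from noise, which is one reason to rerun evaluations locally.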

Summary

Qwen3-Max is not a teaser: it is a deployable 1T-parameter MoE with documented thinking-mode semantics and reproducible access paths (Qwen Chat, Model Studio). Treat day-one benchmark wins as directionally strong but continue local evals. The firmest facts are scale (>1T parameters confirmed by Alibaba, ≈36T training tokens as team-reported) and the API contract for thinking-mode runs (incremental_output=true). For teams building coding and agentic systems, this is ready for hands-on trials and internal gating against SWE-/Tau2-style suites.


The post Alibaba’s Qwen3-Max: Production-Ready Thinking Mode, 1T+ Parameters, and Day-One Coding/Agentic Bench Signals appeared first on MarkTechPost.
