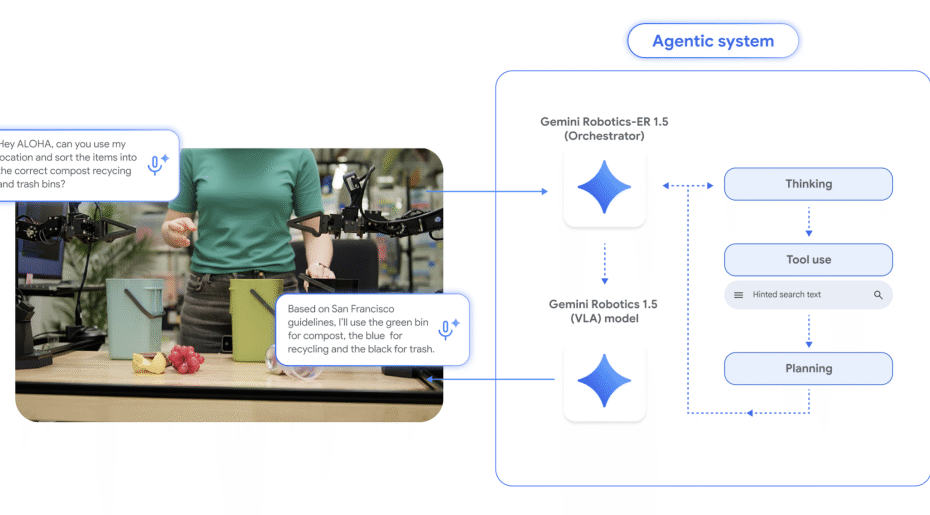

Can a single AI stack plan like a researcher, reason over scenes, and transfer motions across different robots without retraining from scratch? Google DeepMind’s Gemini Robotics 1.5 says yes, by splitting embodied intelligence into two models: Gemini Robotics-ER 1.5 for high-level embodied reasoning (spatial understanding, planning, progress/success estimation, tool use) and Gemini Robotics 1.5 for low-level visuomotor control. The system targets long-horizon, real-world tasks (e.g., multi-step packing, waste sorting with local rules) and introduces motion transfer to reuse data across heterogeneous platforms.

What is the stack?

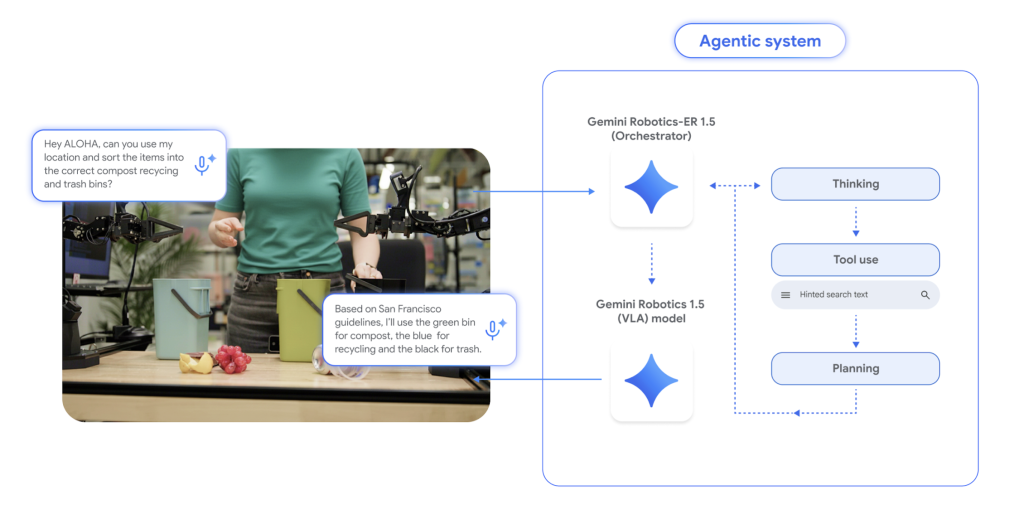

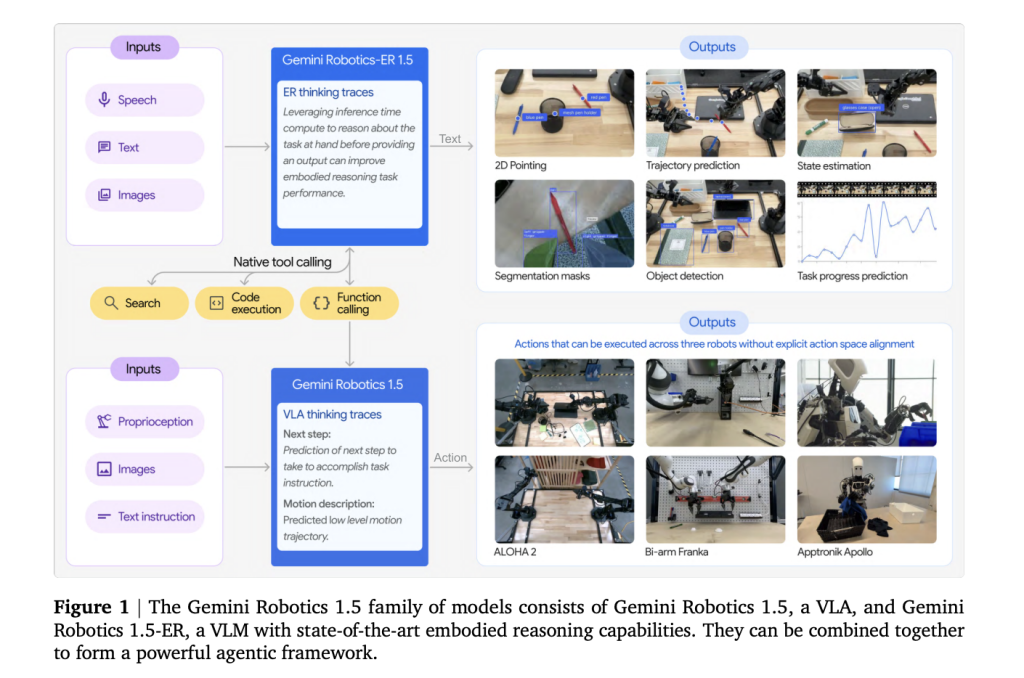

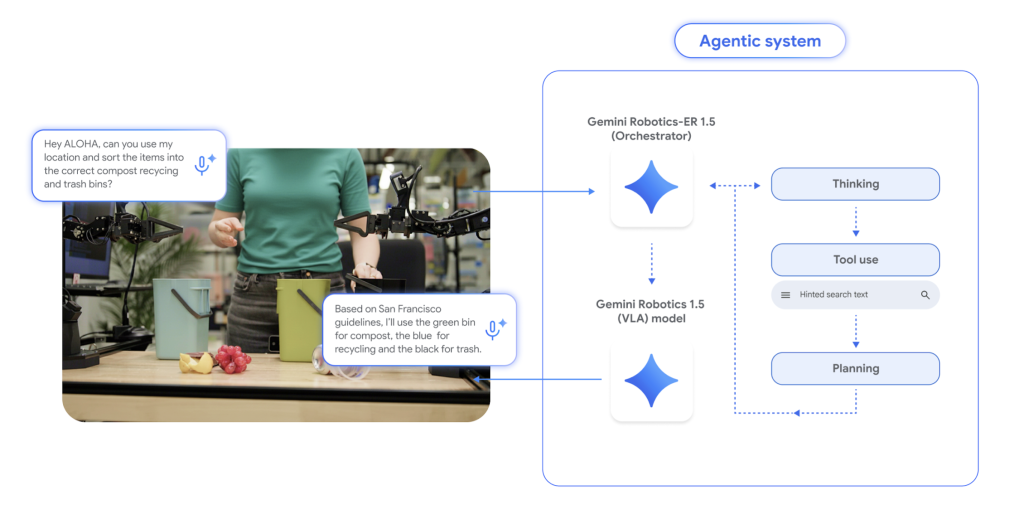

- Gemini Robotics-ER 1.5 (reasoner/orchestrator): A multimodal planner that ingests images/video (and optionally audio), grounds references via 2D points, tracks progress, and invokes external tools (e.g., web search or local APIs) to fetch constraints before issuing sub-goals. It’s available via the Gemini API in Google AI Studio.

- Gemini Robotics 1.5 (VLA controller): A vision-language-action model that converts instructions and percepts into motor commands, producing explicit “think-before-act” traces to decompose long tasks into short-horizon skills. Availability is limited to selected partners during the initial rollout.
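To make the 2D-point grounding concrete, here is a minimal sketch of turning a pointing reply into pixel coordinates. It assumes the reasoner answers with a JSON list of `{"point": [y, x], "label": ...}` entries whose coordinates are normalized to 0-1000, which is the convention the Gemini API robotics docs describe at the time of writing; check the current docs before relying on it. The `to_pixels` helper, the `PixelPoint` type, and the sample reply are hypothetical and hand-written for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class PixelPoint:
    label: str
    x: int
    y: int


def to_pixels(response_text: str, width: int, height: int) -> list[PixelPoint]:
    """Convert normalized [y, x] points (assumed 0-1000 range) to pixel coordinates."""
    points = []
    for item in json.loads(response_text):
        y_norm, x_norm = item["point"]  # assumed order: y first, then x
        points.append(
            PixelPoint(
                label=item["label"],
                x=round(x_norm / 1000 * width),
                y=round(y_norm / 1000 * height),
            )
        )
    return points


# Hypothetical model reply, not real model output.
sample = '[{"point": [500, 250], "label": "banana"}, {"point": [100, 900], "label": "bowl"}]'
pixels = to_pixels(sample, width=640, height=480)
```

A downstream controller or visualizer would consume `pixels` directly; keeping the conversion in one place makes it easy to adjust if the output convention changes.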

Why split cognition from control?

Earlier end-to-end vision-language-action (VLA) models struggle to plan robustly, verify success, and generalize across embodiments. Gemini Robotics 1.5 separates those concerns: Gemini Robotics-ER 1.5 handles deliberation (scene reasoning, sub-goaling, success detection), while the VLA specializes in execution (closed-loop visuomotor control). This modularity improves interpretability (visible internal traces), error recovery, and long-horizon reliability.
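The division of labor can be sketched as a small orchestration loop: the reasoner proposes sub-goals and judges success, while the controller only executes. This is an illustrative sketch under stated assumptions, not DeepMind's implementation. `ScriptedReasoner`, `FlakyController`, and `run_task` are hypothetical stand-ins, and the retry policy is an assumption chosen to show error recovery.

```python
from dataclasses import dataclass, field


@dataclass
class ScriptedReasoner:
    """Hypothetical stand-in for the ER model: plans sub-goals, checks success."""
    plan_steps: list[str]

    def plan(self, task: str) -> list[str]:
        return list(self.plan_steps)

    def succeeded(self, subgoal: str, observation: dict) -> bool:
        return observation.get("done") == subgoal


@dataclass
class FlakyController:
    """Hypothetical stand-in for the VLA: fails the first try at chosen sub-goals."""
    fail_once: set[str] = field(default_factory=set)

    def execute(self, subgoal: str) -> dict:
        if subgoal in self.fail_once:
            self.fail_once.discard(subgoal)
            return {"done": None}  # simulated failed attempt
        return {"done": subgoal}


def run_task(task, reasoner, controller, max_retries=2):
    """Execute each sub-goal, retrying when the reasoner reports failure."""
    log = []
    for subgoal in reasoner.plan(task):
        for attempt in range(1, max_retries + 2):
            observation = controller.execute(subgoal)
            ok = reasoner.succeeded(subgoal, observation)
            log.append((subgoal, attempt, ok))
            if ok:
                break
        else:
            return False, log  # retries exhausted for this sub-goal
    return True, log


reasoner = ScriptedReasoner(["pick up can", "open recycling bin", "drop can"])
controller = FlakyController(fail_once={"open recycling bin"})
finished, log = run_task("sort the can", reasoner, controller)
```

Because success detection lives in the reasoner, the loop can retry or abort without the controller knowing anything about the overall plan.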

Motion Transfer across embodiments

A core contribution is Motion Transfer (MT): the VLA is trained on a unified motion representation built from heterogeneous robot data (ALOHA, bi-arm Franka, and Apptronik Apollo), so skills learned on one platform can transfer zero-shot to another. This reduces per-robot data collection and narrows sim-to-real gaps by reusing cross-embodiment priors.
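The article does not describe how MT builds its unified representation, so the following is not the MT recipe. As a much simpler stand-in, it shows a pad-and-mask shared action vector, a common trick for feeding data from robots with different action sizes into one model. The action dimensions below are placeholders, not published specifications, and `to_shared` and `from_shared` are hypothetical helpers.

```python
# Placeholder action sizes per robot; real values are not given in the article.
ACTION_DIMS = {"aloha": 14, "franka_biarm": 16, "apollo": 20}
SHARED_DIM = max(ACTION_DIMS.values())


def to_shared(robot: str, action: list[float]) -> tuple[list[float], list[int]]:
    """Zero-pad a robot-specific action to SHARED_DIM and return a validity mask."""
    dim = ACTION_DIMS[robot]
    if len(action) != dim:
        raise ValueError(f"{robot} expects {dim} values, got {len(action)}")
    pad = SHARED_DIM - dim
    return action + [0.0] * pad, [1] * dim + [0] * pad


def from_shared(robot: str, shared: list[float]) -> list[float]:
    """Drop the padding to recover the robot-specific action."""
    return shared[: ACTION_DIMS[robot]]


aloha_action = [0.1] * 14
shared, mask = to_shared("aloha", aloha_action)
restored = from_shared("aloha", shared)
```

The mask lets a training loss ignore padded dimensions. A learned shared representation, as the article describes for MT, goes well beyond this kind of padding.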

Quantitative signals

The research team reported controlled A/B comparisons on real hardware and on aligned MuJoCo scenes. The results include:

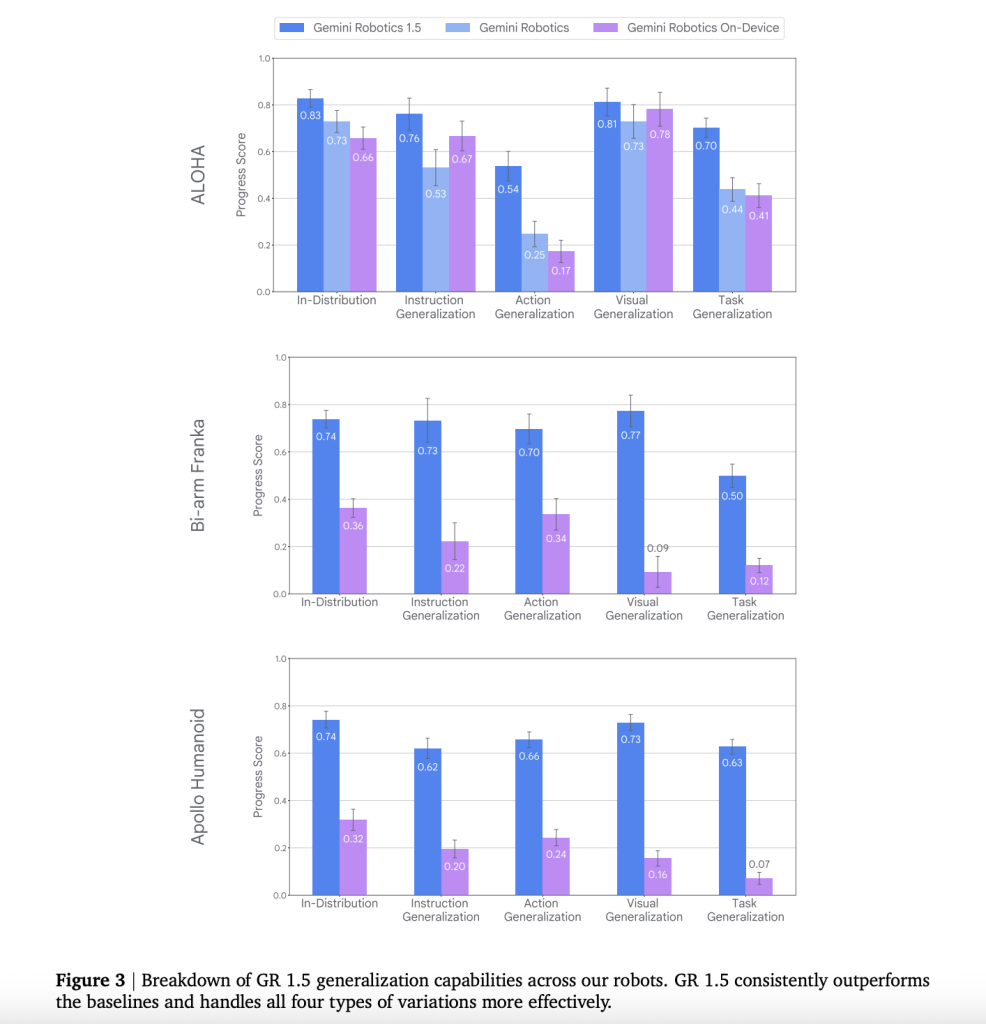

- Generalization: Robotics 1.5 surpasses prior Gemini Robotics baselines in instruction following, action generalization, visual generalization, and task generalization across the three platforms.

- Zero-shot cross-robot skills: MT yields measurable gains in progress and success when transferring skills across embodiments (e.g., Franka→ALOHA, ALOHA→Apollo), rather than merely improving partial progress.

- “Thinking” improves acting: Enabling VLA thought traces increases long-horizon task completion and stabilizes mid-rollout plan revisions.

- End-to-end agent gains: Pairing Gemini Robotics-ER 1.5 with the VLA agent substantially improves progress on multi-step tasks (e.g., desk organization, cooking-style sequences) versus a Gemini-2.5-Flash-based baseline orchestrator.
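The distinction between progress and success in the bullets above is easy to blur, so here is a small sketch of how the two metrics can diverge. The definitions are assumptions (progress as the mean fraction of sub-steps completed, success as the share of fully completed rollouts) and the report's exact rubric may differ. The rollout numbers are toy values, not results from the report.

```python
def summarize(rollouts: list[tuple[int, int]]) -> tuple[float, float]:
    """Return (mean progress, success rate) for (steps_done, steps_total) pairs."""
    n = len(rollouts)
    progress = sum(done / total for done, total in rollouts) / n
    success = sum(done == total for done, total in rollouts) / n
    return progress, success


# Toy rollouts: (sub-steps completed, sub-steps required). Not real data.
toy_rollouts = [(4, 4), (2, 4), (4, 4), (1, 4)]
progress, success = summarize(toy_rollouts)
```

A policy can raise progress by getting further into failed rollouts without completing any more tasks, which is why the two numbers are worth reporting separately.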

Safety and evaluation

The DeepMind research team highlights layered controls: policy-aligned dialog/planning, safety-aware grounding (e.g., not pointing to hazardous objects), low-level physical limits, and expanded evaluation suites (e.g., ASIMOV/ASIMOV-style scenario testing and auto red-teaming to elicit edge-case failures). The goal is to catch hallucinated affordances or nonexistent objects before actuation.
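Two of these layers can be sketched as simple pre-actuation checks: vetting a grounded target against what was actually detected, and clamping commanded speeds. This is a toy illustration rather than DeepMind's safety stack. The hazard list, the speed limit, and the `vet_target` and `clamp_speeds` helpers are all hypothetical.

```python
HAZARD_LABELS = {"knife", "hot pan"}  # hypothetical hazard list
MAX_JOINT_SPEED = 1.0  # hypothetical limit, rad/s


def vet_target(label: str, detected_labels: set[str]) -> tuple[bool, str]:
    """Reject targets that were never detected or that are flagged as hazardous."""
    if label not in detected_labels:
        return False, "target not detected in scene"
    if label in HAZARD_LABELS:
        return False, "target is on the hazard list"
    return True, "ok"


def clamp_speeds(speeds: list[float]) -> list[float]:
    """Clip each commanded joint speed into [-MAX_JOINT_SPEED, MAX_JOINT_SPEED]."""
    return [max(-MAX_JOINT_SPEED, min(MAX_JOINT_SPEED, s)) for s in speeds]


scene = {"mug", "knife", "sponge"}
mug_check = vet_target("mug", scene)
ghost_check = vet_target("unicorn", scene)
safe_speeds = clamp_speeds([0.5, -2.0, 3.0])
```

The first check targets the hallucinated-object case mentioned above, while the clamp is a last line of defense that holds regardless of what the planner requested.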

Competitive/industry context

Gemini Robotics 1.5 is a shift from “single-instruction” robotics toward agentic, multi-step autonomy with explicit web/tool use and cross-platform learning, a capability set relevant to consumer and industrial robotics. Early partner access centers on established robotics vendors and humanoid platforms.

Key Takeaways

- Two-model architecture (ER ↔ VLA): Gemini Robotics-ER 1.5 handles embodied reasoning (spatial grounding, planning, success/progress estimation, tool calls), while Robotics 1.5 is the vision-language-action executor that issues motor commands.

- “Think-before-act” control: The VLA produces explicit intermediate reasoning/traces during execution, improving long-horizon decomposition and mid-task adaptation.

- Motion Transfer across embodiments: A single VLA checkpoint reuses skills across heterogeneous robots (ALOHA, bi-arm Franka, Apptronik Apollo), enabling zero-/few-shot cross-robot execution rather than per-platform retraining.

- Tool-augmented planning: ER 1.5 can invoke external tools (e.g., web search) to fetch constraints, then condition plans—e.g., packing after checking local weather or applying city-specific recycling rules.

- Quantified improvements over prior baselines: The tech report documents higher instruction/action/visual/task generalization and better progress/success on real hardware and aligned simulators; results cover cross-embodiment transfers and long-horizon tasks.

- Availability and access: ER 1.5 is available via the Gemini API (Google AI Studio) with docs, examples, and preview knobs; Robotics 1.5 (VLA) is limited to select partners with a public waitlist.

- Safety & evaluation posture: DeepMind highlights layered safeguards (policy-aligned planning, safety-aware grounding, physical limits) and an upgraded ASIMOV benchmark plus adversarial evaluations to probe risky behaviors and hallucinated affordances.
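The tool-augmented planning takeaway can be sketched as a lookup followed by a conditioned plan. Everything here is hypothetical: the rules table stands in for a web search or local API call, the city name is invented, and `lookup_rules` and `plan_sorting` are illustrative helpers rather than part of any Gemini API.

```python
# Invented rules for a fictional city; a real system would fetch these via a tool call.
RECYCLING_RULES = {
    "exampleville": {
        "banana peel": "compost",
        "soda can": "recycling",
        "chip bag": "landfill",
    },
}


def lookup_rules(city: str) -> dict[str, str]:
    """Stand-in for an external tool call that returns local sorting rules."""
    return RECYCLING_RULES[city.lower()]


def plan_sorting(items: list[str], city: str) -> list[str]:
    """Fetch constraints first, then emit one sub-goal per item."""
    rules = lookup_rules(city)
    plan = []
    for item in items:
        bin_name = rules.get(item)
        if bin_name is None:
            plan.append(f"ask operator where {item} goes")
        else:
            plan.append(f"place {item} in {bin_name} bin")
    return plan


plan = plan_sorting(["soda can", "banana peel", "battery"], "Exampleville")
```

Falling back to a question for items without a known rule is one possible design choice; it avoids guessing when the fetched constraints do not cover the scene.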

Summary

Gemini Robotics 1.5 operationalizes a clean separation of embodied reasoning and control, adds motion transfer to reuse data across robots, and exposes the reasoning surface (point grounding, progress/success estimation, tool calls) to developers via the Gemini API. For teams building real-world agents, the design reduces the per-platform data burden and strengthens long-horizon reliability, while keeping safety in scope with dedicated test suites and guardrails.

The post Gemini Robotics 1.5: DeepMind’s ER↔VLA Stack Brings Agentic Robots to the Real World appeared first on MarkTechPost.
