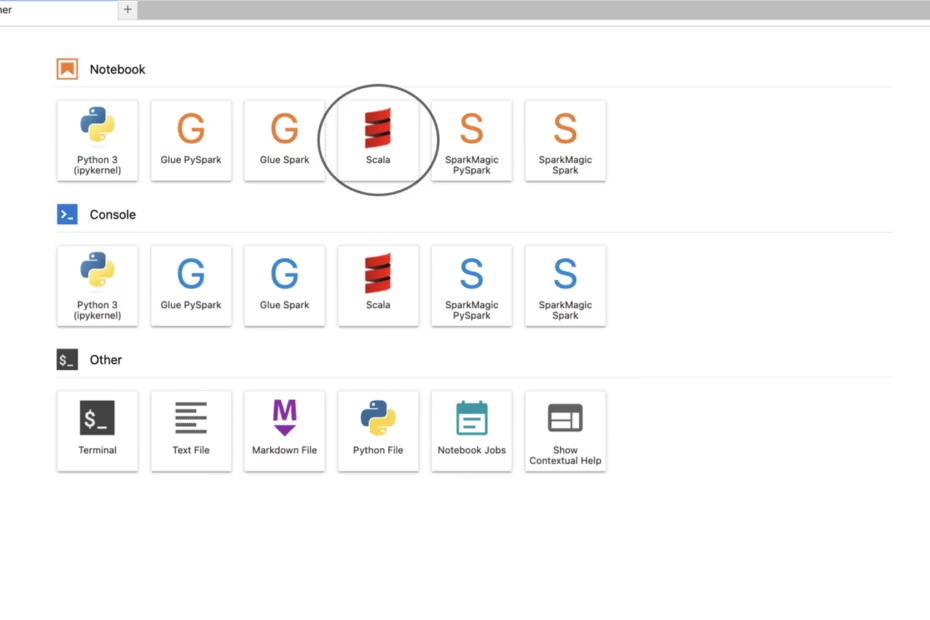

Scala development in Amazon SageMaker Studio with Almond kernel

Varun Rajan | Artificial Intelligence

Scala stands out as a versatile programming language that combines object-oriented and functional programming approaches. By running on the Java Virtual Machine (JVM), it maintains seamless compatibility with Java libraries while offering a concise and scalable development experience. The language has distinguished itself in…