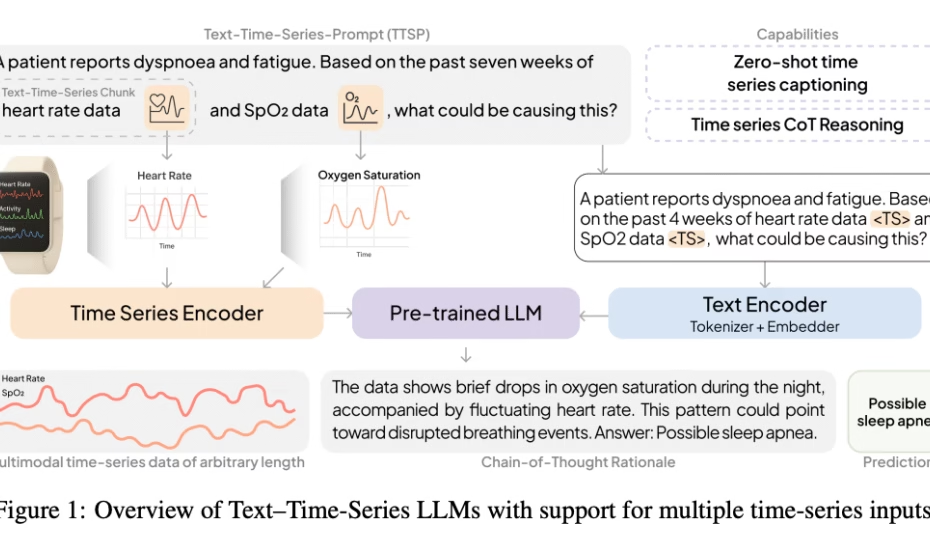

Meet OpenTSLM: A Family of Time-Series Language Models (TSLMs) Revolutionizing Medical Time-Series Analysis

By Jean-marc Mommessin, Artificial Intelligence Category – MarkTechPost

A significant development is set to transform AI in healthcare. Researchers at Stanford University, in collaboration with ETH Zurich and tech leaders including Google Research and Amazon, have introduced OpenTSLM, a novel family of Time-Series Language Models (TSLMs). This breakthrough addresses a critical limitation…