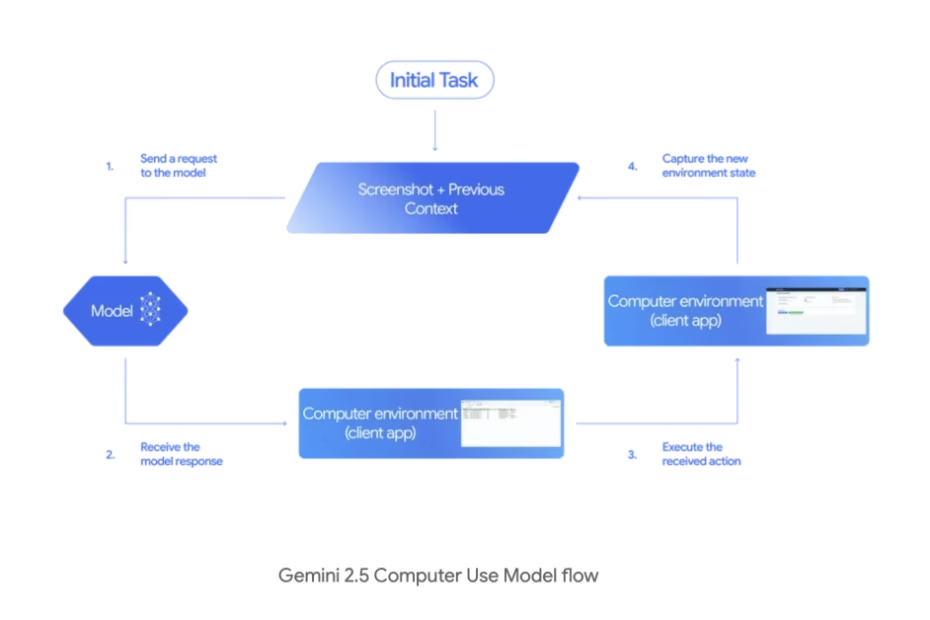

Google AI Introduces Gemini 2.5 ‘Computer Use’ (Preview): A Browser-Control Model to Power AI Agents to Interact with User Interfaces

By Michal Sutter, MarkTechPost (Artificial Intelligence category)

Which of your browser workflows would you delegate today if an agent could plan and execute predefined UI actions? Google AI introduces Gemini 2.5 Computer Use, a specialized variant of Gemini 2.5 that plans and executes real UI actions in a live browser via…